AI Infrastructure

Powering the Future

🌁 Fog City Tech News

AI, Data, GTM, Partnerships & Revenue Growth

This Week’s Focus: AI Infra Summit 4

⚡ We’re Still in the Early Days of AI Infrastructure Deployment

The AI Infrastructure Summit, held in San Francisco on Friday, November 7, 2025, brought together the builders, operators, and innovators powering AI infrastructure. Sponsors included AMD, Crusoe, Supermicro, and WEKA. The event was organized by IGNITE GTM, an AI infrastructure strategy consultancy and event execution company, and drew more than 650 AI leaders.

My Biggest Takeaway:

There is an urgent need to move beyond experimental AI projects and start to focus on building scalable, efficient, and production-ready full-stack AI infrastructure that delivers tangible business value. Wall Street short sellers are already sounding the alarm on some AI stocks, namely Palantir and Nvidia.

☕ Starting Strong: Women x AI Breakfast Panel

I kicked off the day with a breakfast panel sponsored by Women x AI, featuring executives from Google, Lambda, Capstraint, and Gathid. Host Lauren Vaccarello, CMO of WEKA, noted that “we are in minute one of day one” of AI infrastructure deployment. Starting the summit with this panel set the tone for the day, highlighting not just the technical infrastructure challenges but also the diverse leadership building the AI ecosystem.

🎤 🔥 Five Critical Trends Reshaping AI Infrastructure

Megawatt Data Centers & Liquid Cooling: Megawatt-scale racks are on the horizon, with liquid cooling as a vital enabling technology. Currently, only 1-2% of data centers have the infrastructure to support such high power densities. Megawatt sidecar solutions and optimized liquid cooling are crucial for next-generation AI data centers to address power delivery and heat dissipation challenges. We’re very close to 20-megawatt data centers becoming reality.

GPU-as-a-Service & Tokenomics: Subscription models for GPU compute are gaining traction, with throughput measured in “tokens per GPU second.” Token economics combine direct input/output token costs with infrastructure overhead, enabling precise pricing and hedging strategies for AI workloads.
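To make the token cost idea concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not a model presented at the summit: the class and function names are hypothetical, the prices are made-up placeholders, and treating infrastructure overhead as a flat percentage on top of direct token cost is an assumption.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    """Hypothetical pricing inputs; all values are illustrative placeholders."""
    input_price_per_million: float   # USD per 1M input tokens (assumed)
    output_price_per_million: float  # USD per 1M output tokens (assumed)
    overhead_rate: float             # overhead as a fraction of direct cost (assumed)


def workload_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int) -> float:
    """Direct input/output token cost plus a proportional infrastructure overhead."""
    direct = (
        (input_tokens / 1_000_000) * pricing.input_price_per_million
        + (output_tokens / 1_000_000) * pricing.output_price_per_million
    )
    return direct * (1 + pricing.overhead_rate)


def tokens_per_gpu_second(total_tokens: int, gpu_count: int, seconds: float) -> float:
    """Throughput normalized by GPU count and wall-clock time."""
    if gpu_count <= 0 or seconds <= 0:
        raise ValueError("gpu_count and seconds must be positive")
    return total_tokens / (gpu_count * seconds)


# Example with made-up numbers: 1M input tokens, 500K output tokens.
pricing = TokenPricing(input_price_per_million=2.0,
                       output_price_per_million=8.0,
                       overhead_rate=0.25)
cost = workload_cost(pricing, input_tokens=1_000_000, output_tokens=500_000)
throughput = tokens_per_gpu_second(total_tokens=1_200_000, gpu_count=8, seconds=60)
```

A real pricing model would likely break overhead into power, cooling, networking, and utilization rather than a single rate; the flat percentage here just keeps the arithmetic easy to follow.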

Sovereign Cloud Expansion: Sovereign clouds address strict data residency and operational autonomy requirements for regulated industries and the public sector, opening massive European markets for AI deployment. AWS is advancing its European Sovereign Cloud initiative, with a fully independent region planned in Brandenburg, Germany, by the end of 2025. Other major cloud providers, including Microsoft, Google, and SAP, are also expanding their offerings with new regional data centers, enhanced security controls, and flexible deployment options such as on-site hosting to meet these needs across industries and governments.

Accelerated Product Cycles: According to Thomas Jorgensen, Sr. Director, Technology Enablement, Supermicro, product roadmapping has shifted from traditional six-month cycles to biweekly updates, reflecting the unprecedented pace of AI infrastructure development. Hardware depreciation schedules vary significantly: CPUs, servers, and storage depreciate over 3-5 years, while GPUs like Nvidia’s A100 have much longer schedules, which affects financial planning and asset management strategies.
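As a rough illustration of why schedule length matters for financial planning, the Python sketch below compares annual expense under different useful lives. It assumes straight-line depreciation, which the summit discussion did not specify, and the purchase price, salvage value, and function names are hypothetical.

```python
def straight_line_schedule(purchase_price: float, salvage_value: float,
                           useful_life_years: int) -> list[float]:
    """Return the annual depreciation expense for each year of the asset's life."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    annual = (purchase_price - salvage_value) / useful_life_years
    return [annual] * useful_life_years


def book_value(purchase_price: float, salvage_value: float,
               useful_life_years: int, years_elapsed: int) -> float:
    """Remaining book value after a number of years, floored at salvage value."""
    schedule = straight_line_schedule(purchase_price, salvage_value, useful_life_years)
    elapsed = min(max(years_elapsed, 0), useful_life_years)
    return purchase_price - sum(schedule[:elapsed])


# Same hypothetical asset, two schedule lengths from the 3-5 year range above.
three_year = straight_line_schedule(100_000, 10_000, 3)
five_year = straight_line_schedule(100_000, 10_000, 5)
```

The longer the schedule, the smaller the annual expense and the higher the book value carried in any given year, which is why the choice of schedule feeds directly into asset management decisions.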

Edge AI Challenges: Qualcomm’s Dr. Vinesh Sukumar, VP, AI, emphasized the technical complexity of edge AI inferencing due to latency, power, and compute constraints at the network edge—a crucial frontier as AI moves beyond hyperscale data centers into distributed environments.

💰 The Scale of Investment is Staggering

$7 TRILLION in global AI infrastructure investment needed by 2030

According to McKinsey research shared at the summit, nearly $7 trillion in global capital expenditure will be required for AI infrastructure by 2030, with $5.2 trillion dedicated to AI-ready data centers alone. This isn’t speculative investment; it’s the fundamental economic shift required to power AI at scale.

Major tech companies like Microsoft, Google, Meta, and AWS are investing hundreds of billions of dollars from 2025 through 2028 to rapidly expand AI compute infrastructure, including facilities over 100 MW. The conversation has moved from “if” to “how fast.”

🏢 AI-ready data centers: 20-30 megawatts per facility (vs. traditional 5-10 MW)

This fundamental economic reality—validated by McKinsey’s $7 trillion forecast—demonstrates that AI infrastructure investment represents structural transformation, not speculative excess.

🌐 The AI Circular Economy

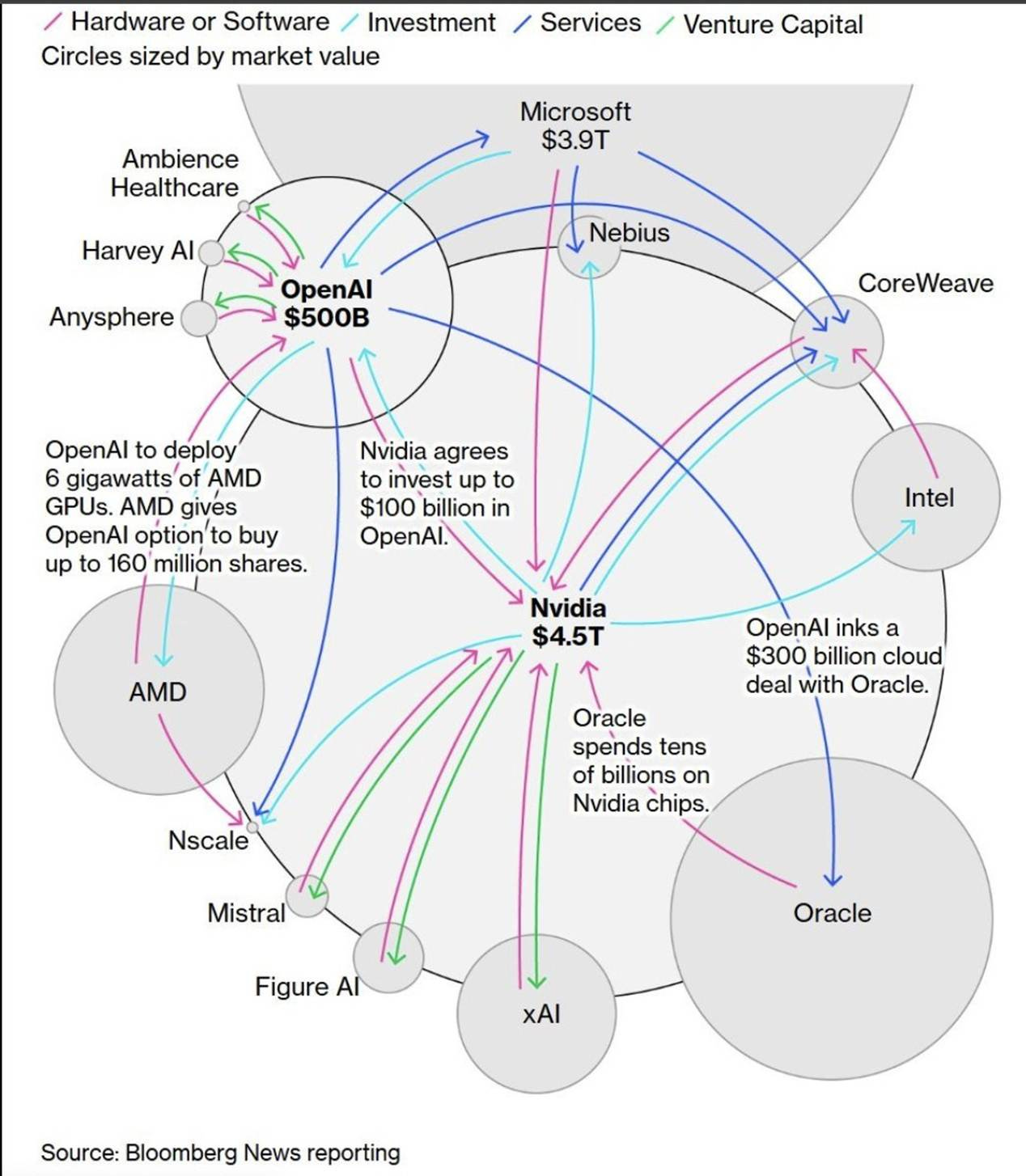

Devansh Devansh, Founder, Chocolate Milk Cult Leader & Machine Learning Engineer, explained the critical differences between a Ponzi scheme, a bubble, and AI reality. Some see the AI circular economy as a bubble, a view he strongly dismissed as lazy thinking. He noted that “we’re not in a bubble; we’re witnessing hardware lock-in and sustained demand that represents a fundamental, long-term economic shift.”

Conversely, Bloomberg published an article on October 10th discussing AI investment circularity and the risk of a financial bubble if any of the key players falter. The accompanying graphic went viral, receiving over 4 million views on X, not including widespread reposts.

Irrespective of valuations, whoever controls AI infrastructure will define the next generation of computing, economic opportunity, and geopolitical power.

💡 Real-World AI Deployments at Scale

Beyond infrastructure theory, the summit highlighted tangible AI deployments demonstrating production readiness.

LinkedIn’s AI Agent: With 1.2 billion members and 70 million companies, LinkedIn has launched its first AI agent operating on the Flyte OS, demonstrating AI’s impact on a massive user base in a production environment. This shows that AI infrastructure is already powering real applications at billion-user scale.

🚀 What This Means for Partnerships & GTM

For partnership and GTM leaders, the AI infrastructure landscape presents unprecedented opportunities:

New Partnership Categories Emerging: GPU-as-a-Service providers, sovereign cloud operators, liquid cooling innovators, and edge AI specialists represent entirely new partnership ecosystems. Companies building AI applications need infrastructure partners; infrastructure providers need distribution and go-to-market partnerships. This creates opportunities for channel programs, strategic alliances, and ecosystem development.

Tokenomics Creates New Business Models: The shift to token-based pricing for GPU compute enables flexible consumption models, creating opportunities for channel partnerships, reseller programs, and managed service providers to package and distribute AI infrastructure. Carmen Li, CEO of Silicon Data, is building the world’s first GPU compute risk management platform. She presented financial modeling for AI partnership models built on a cost framework that includes direct input/output token costs and indirect infrastructure overhead.

Geographic Expansion Through Sovereign Clouds: Sovereign cloud regions address data governance requirements, opening European and international markets for AI companies that partner with compliant infrastructure providers. This represents a massive GTM unlock for B2B SaaS and AI companies previously blocked by regulatory constraints.

🔮 The Bottom Line

The AI Infra Summit reinforced what many of us in the Bay Area ecosystem already sense: we are witnessing the most significant technology infrastructure buildout since the internet itself. The $7 trillion investment forecast isn’t speculation—it’s the minimum required to power AI at scale.

For partnership professionals, this creates a generational opportunity. New categories are being created, business models are being invented, and geographic markets are opening. The companies that move fastest to build strategic infrastructure partnerships—whether with GPU providers, data center operators, semiconductor companies, or sovereign cloud operators—will capture disproportionate value.

The urgent need identified at the summit is clear: move beyond experimental AI projects and focus on building scalable, efficient, production-ready full-stack AI infrastructure that delivers tangible business value. For those of us in partnerships and GTM, this means building the ecosystem relationships, channel programs, and strategic alliances that will enable AI’s production deployment at enterprise scale.

The foundation is being laid. And San Francisco remains at the epicenter of this transformation.

Shout out to IGNITE GTM for organizing this incredible event and inviting me. I was there for 12 hours—it was hard to leave with all the excitement over AI Infrastructure.

(Me in center with Persian Founders)

Fog City Tech News is published weekly, providing insights into AI, Data, Partnerships, Revenue Growth & GTM.

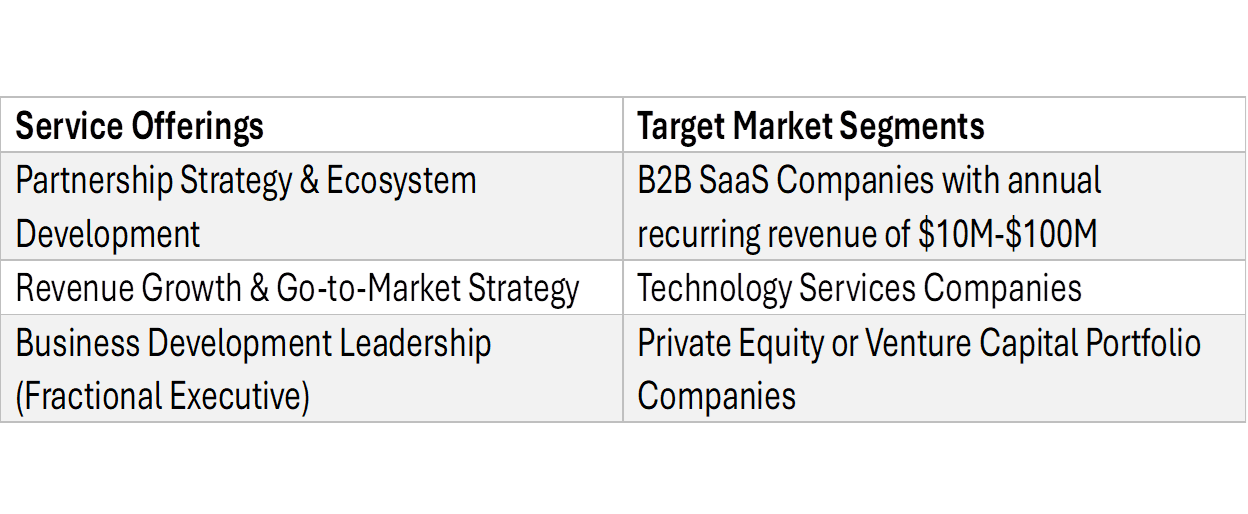

If you are a tech company seeking my expertise, reach out. Referrals appreciated.

This month I am launching a complimentary AI Assessment Discovery Call. DM me for info.

Kind regards,

Vesna Arsic

Founder, Fog City Strategic Advisory

San Francisco, California

LinkedIn

If you wish to continue receiving my weekly newsletter, please reply to this email so that it does not go